Image source, illustration by Serenity Stull/Getty Images

- Author, Thomas Germain

- Author's title, Technology correspondent, BBC News

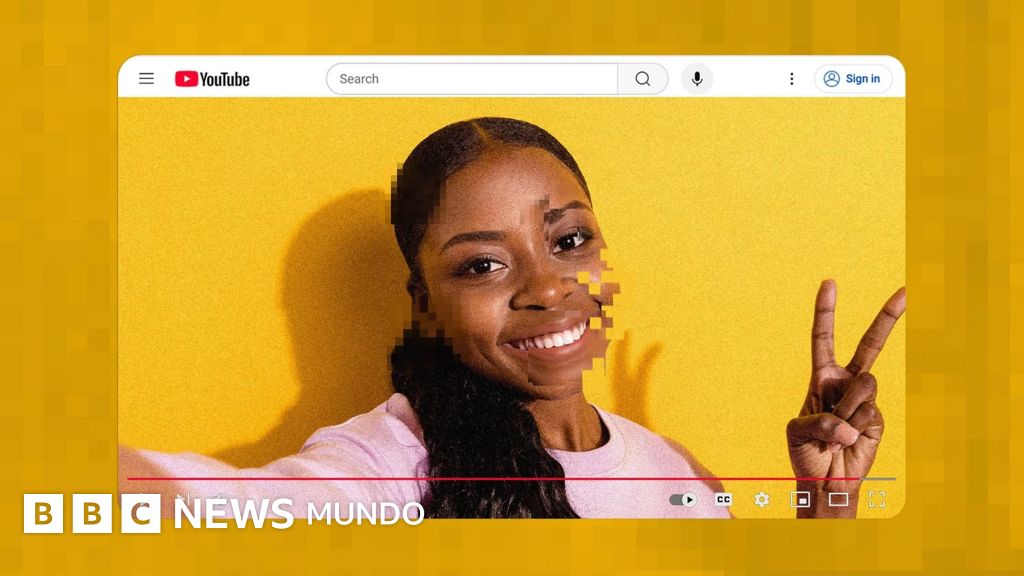

YouTube used artificial intelligence to retouch a number of videos without notifying their creators or asking permission. As AI quietly intervenes in our world, what happens to our connection with real life?

Rick Beato's face simply did not look right. “I thought: 'My hair looks weird!'” he says. “And the more I looked, the more it seemed like I was wearing makeup.”

Beato has a YouTube channel with more than five million subscribers, where he has made almost 2,000 videos exploring the world of music.

Something seemed off in one of his recent posts, but he could barely make out the difference. “I thought: 'Am I imagining it?'”

It turns out he wasn't. In recent months, YouTube has secretly used artificial intelligence (AI) to retouch users' videos without warning them or asking their permission.

Wrinkles in shirts look more defined. Skin is sharper in some areas and smoother in others. Pay close attention to the ears and you may notice them warping.

These changes are small, barely visible unless you compare the videos with others. However, some disturbed YouTubers say the edits give their content a subtle and unpleasant AI-generated feel.

There is a broader trend at play. A growing share of reality is preprocessed by AI before it reaches us. In the end, the question will not be whether we can tell the difference, but whether it is eroding our ties to the world around us.

“It completely misrepresents me”

“The more I looked at it, the more it bothered me,” says Rhett Shull, another popular music YouTuber.

Shull, a friend of Beato's, began reviewing his own posts and discovered the same strange details.

He posted a video on the subject that has racked up more than 500,000 views.

“If I had wanted this terrible excessive retouching, I would have done it myself. But the bigger issue is that it looks AI-generated. I think that deeply misrepresents me, what I do and my voice on the internet. It could erode the trust my audience has in me, even if only a little. It simply bothers me.”

Shull and Beato were not the first to notice the problem.

Complaints on social media date back to at least June, with users posting close-ups of strange-looking body parts and questioning YouTube's intentions.

Now, after months of rumors in comment sections, the company has finally confirmed that it is modifying a limited number of videos on YouTube Shorts, the app's short-form video feature.

“We are running an experiment on some YouTube Shorts that uses traditional machine learning technology to reduce blur, remove noise and improve the clarity of videos during processing (similar to what a modern smartphone does when recording a video),” said Rene Ritchie, YouTube's head of editorial and creator liaison, in a post on X.

“YouTube is always working to offer the best possible video quality and experience, and will continue to take feedback from creators and viewers into account as we roll out and improve these features.”

YouTube did not answer the BBC's questions about whether users will be given a choice over these adjustments to their videos.

Imprecise terms

It is true that modern smartphones have built-in features that can improve image and video quality. But that is something completely different, according to Samuel Woolley, professor of disinformation studies at the University of Pittsburgh in the US.

“You can decide what you want your phone to do and whether or not to turn on certain features. What we have here is a company manipulating content from leading users that is then distributed to the public without the consent of the people who produce the videos.”

Woolley argues that YouTube's choice of words feels like an attempt to deflect attention.

“I think using the term 'machine learning' is an attempt to obscure the fact that they used AI, because of the concerns surrounding this technology. Machine learning is, in fact, a subfield of artificial intelligence,” he says.

YouTube's Ritchie shared additional details in a follow-up post, drawing a line between “traditional machine learning” and generative AI, where an algorithm creates entirely new content by learning patterns from vast datasets.

However, Woolley says this distinction is not meaningful in this case.

Either way, the move is a sign of how AI keeps adding extra steps between us and the information and media we consume, often in ways that go unnoticed at first glance.

“Footprints in the sand are a great analogy,” says Jill Walker Rettberg, a professor at the Center for Digital Narrative at the University of Bergen in Norway.

“You know someone had to have left those footprints. With an analog camera, you know something was in front of the camera because the film was exposed to light. But with algorithms and AI, what does this do to our relationship with reality?”

A growing phenomenon

In March 2025, controversy erupted when apparent AI remasters of the 1980s sitcoms The Cosby Show and A Different World appeared on Netflix.

The series are available in high definition, despite having originally been recorded on video. The Verge described the result as a “nightmarish mess of distorted faces, illegible text and misshapen backgrounds.”

However, this goes beyond 1980s reruns and YouTube videos. In 2023, it was discovered that Samsung was artificially enhancing photos of the Moon taken with its most recent devices.

The company later published a blog post detailing the AI behind its Moon photos.

Google Pixel smartphone users get an even more striking feature that uses AI to fix smiles.

The “Best Take” tool selects the most flattering expressions on people's faces from a series of group photos, combining them into a new and attractive image of a moment that never happened in the real world.

Google's latest device, the Pixel 10, includes a new feature that uses generative AI in the camera to let users zoom up to 100x, far beyond what the camera can physically capture.

As features like these become more common, new questions arise about what photos actually represent.

What is real?

This is not a new paradigm. Thirty years ago, there were similar concerns about the havoc Photoshop would wreak on society.

Decades later, we still talk about the harm done by retouching models in magazines and by beauty filters on social media. Perhaps AI is more of the same, but it amplifies these trends, according to Woolley.

It is clearly something on the minds of engineers at Google, which owns YouTube. In addition to the generative AI capabilities built into the Pixel 10's camera, it is also the first phone to implement new industry-standard content credentials.

These place a digital watermark on images so that users can tell whether they were edited with AI.

But Woolley says that using machine learning to edit YouTube videos without users' knowledge risks blurring the boundaries of online trust.

“This YouTube case reveals how AI is increasingly becoming a medium that defines our lives and realities,” says Woolley. “People already distrust the content they find on social media. What happens if people know that companies are editing content from the top down, without even telling the creators themselves?”

Even so, some are not bothered by YouTube's foray into AI-modified content.

“You know, YouTube is constantly working on new tools and experimenting,” says Beato. “They are a best-in-class company, I have nothing but good things to say. YouTube changed my life.”

This is a BBC Future story, originally published in English.
